Chapter 2: Defining Information

Credit: unknown

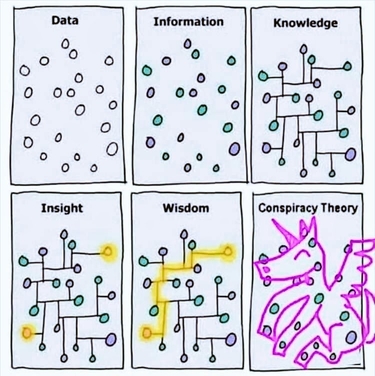

TL;DR: Information Science studies ways to tackle information overload. Humanity’s most recent answer to this age-old problem is a computerized and omnipresent information system that implies both great power and great responsibility.

Definition

Information Science, Retrieval or Theory?

While this course is officially called Information Science, it was originally presented to me as Information Retrieval, which is rather different. And then you might also have heard of Information Theory. So let’s have a look at these disciplines and how they relate to each other.

Information Theory

Wikipedia says:

Information theory studies the quantification, storage, and communication of information. It was originally proposed by Claude Shannon in 1948 to find fundamental limits on signal processing and communication operations such as data compression, in a landmark paper titled “A Mathematical Theory of Communication”. The field is at the intersection of mathematics, statistics, computer science, physics, neurobiology, information engineering, and electrical engineering. Its impact has been crucial to the success of the Voyager missions to deep space, the invention of the compact disc, the feasibility of mobile phones, the development of the Internet, the study of linguistics and of human perception, the understanding of black holes, and numerous other fields.

A well-known example of the application of information theory is data compression (both lossless, e.g. ZIP files, and lossy, e.g. MP3s and JPEGs).

A key measure in information theory is entropy. Entropy quantifies the amount of uncertainty involved in the value of a random variable or the outcome of a random process. For example, identifying the outcome of a fair coin flip (with two equally likely outcomes) provides less information (lower entropy) than specifying the outcome from a roll of a die (with six equally likely outcomes).
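The coin and die comparison can be checked with a few lines of Python. This is only a minimal sketch: the function name `shannon_entropy` and the two example distributions are my own choices for illustration, and the entropy is expressed in bits (base-2 logarithm).

```python
import math


def shannon_entropy(probabilities):
    """Return the Shannon entropy, in bits, of a discrete distribution."""
    # Outcomes with probability 0 contribute nothing and are skipped.
    return sum(-p * math.log2(p) for p in probabilities if p > 0)


coin = [1 / 2] * 2  # fair coin: two equally likely outcomes
die = [1 / 6] * 6   # fair die: six equally likely outcomes

coin_bits = shannon_entropy(coin)
die_bits = shannon_entropy(die)

print(f"coin: {coin_bits:.3f} bits")
print(f"die:  {die_bits:.3f} bits")
```

The coin comes out at 1 bit and the die at log2(6), roughly 2.585 bits: the more equally likely outcomes there are, the more uncertainty is removed by learning the result.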

Another interesting information theory principle that was recently in the news is Landauer’s Principle, which sets a theoretical lower limit on the energy needed to erase one bit of information, and thus on the energy consumption of computation.
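To get a feeling for the order of magnitude involved, here is a small back-of-the-envelope calculation. It assumes the usual statement of Landauer’s Principle, namely that erasing one bit costs at least k·T·ln 2 joules, and it assumes a room temperature of 300 K; the variable names are purely illustrative.

```python
import math

BOLTZMANN_CONSTANT = 1.380649e-23  # joules per kelvin (exact SI value)
temperature = 300.0                # kelvin; assumed room temperature

# Landauer limit: minimum energy needed to erase a single bit.
energy_per_bit = BOLTZMANN_CONSTANT * temperature * math.log(2)

# Scaled up to one gigabyte (8e9 bits), the bound is still tiny.
energy_per_gigabyte = energy_per_bit * 8e9

print(f"per bit:      {energy_per_bit:.3e} J")
print(f"per gigabyte: {energy_per_gigabyte:.3e} J")
```

The bound is a few zeptojoules per bit, far below what present-day hardware actually consumes, which is why it is a theoretical limit rather than a practical one.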

Information Retrieval

Information Retrieval relies on information theory for its methods, but it is a much more applied discipline. Wikipedia says:

Information retrieval (IR) is the activity of obtaining information system resources that are relevant to an information need from a collection of those resources. Searches can be based on full-text or other content-based indexing. Information retrieval is the science of searching for information in a document, searching for documents themselves, and also searching for the metadata that describes data, and for databases of texts, images or sounds.

Automated information retrieval systems are used to reduce what has been called information overload. An IR system is a software system that provides access to books, journals and other documents; it also stores and manages those documents. Web search engines are the most visible IR applications.

An information retrieval process begins when a user enters a query into the system. Queries are formal statements of information needs, for example search strings in web search engines. In information retrieval a query does not uniquely identify a single object in the collection. Instead, several objects may match the query, perhaps with different degrees of relevancy.

An object is an entity that is represented by information in a content collection or database. User queries are matched against the database information. However, as opposed to classical queries of a database, in information retrieval the results returned may or may not match the query, so results are typically ranked. This ranking is a key difference of information retrieval searching compared to database searching.
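The difference between exact matching and ranking can be illustrated with a toy example. The sketch below scores three made-up documents against a query using a simple TF-IDF-style weighting; the collection, the function names and the exact weighting formula are assumptions chosen for illustration, not the method of any particular search engine.

```python
import math
from collections import Counter

# A tiny, invented document collection.
documents = {
    "doc1": "information retrieval ranks documents by relevance",
    "doc2": "a database query returns exact matches",
    "doc3": "web search engines are information retrieval systems",
}


def tokenize(text):
    """Lowercase the text and split it on whitespace."""
    return text.lower().split()


def rank(query, docs):
    """Return (doc_id, score) pairs sorted by descending score."""
    tokenized = {doc_id: tokenize(text) for doc_id, text in docs.items()}
    n_docs = len(tokenized)
    # Document frequency: in how many documents does each term occur?
    df = Counter(term for tokens in tokenized.values() for term in set(tokens))
    scores = {}
    for doc_id, tokens in tokenized.items():
        tf = Counter(tokens)
        score = 0.0
        for term in tokenize(query):
            if term in tf:
                # Rare terms weigh more than terms found in many documents.
                score += tf[term] * math.log(1 + n_docs / df[term])
        scores[doc_id] = score
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


results = rank("information retrieval systems", documents)
for doc_id, score in results:
    print(f"{doc_id}: {score:.3f}")
```

Here `doc3` ranks first because it contains all three query terms, `doc1` follows with two of them, and `doc2` ends up last with a score of zero. A classical database query for the exact phrase would simply return a match or nothing at all.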

Depending on the application the data objects may be, for example, text documents, images, audio, mind maps or videos. Often the documents themselves are not kept or stored directly in the IR system, but are instead represented in the system by document surrogates or metadata.

Information Science

Finally, Information Science is introduced on Wikipedia as follows:

Information science (also known as information studies) is an academic field which is primarily concerned with analysis, collection, classification, manipulation, storage, retrieval, movement, dissemination, and protection of information. Practitioners within and outside the field study the application and the usage of knowledge in organizations along with the interaction between people, organizations, and any existing information systems with the aim of creating, replacing, improving, or understanding information systems.

Historically, information science is associated with computer science, psychology, technology and intelligence agencies. However, information science also incorporates aspects of diverse fields such as archival science, cognitive science, commerce, law, linguistics, museology, management, mathematics, philosophy, public policy, and social sciences.

Some key concepts of information studies, as found in Ko’s Foundations of Information, include:

information technology: any technology that serves to capture, store, process, or transmit information (e.g. the printing press, photography, …)

information system: a process that organizes people, technology, and data to allow people to create, store, manipulate, distribute, and access information (e.g. the postal services, a library, the Internet, …)

information behaviour or seeking: people seeking, managing, sharing, and using information across all possible contexts

sensemaking: iterative information seeking, involving not only gathering information, but making sense of it, integrating it into our understanding, and using that new understanding to shape further information seeking

information interface: the mediator between people and information systems (paper, audio, computers, …)

information architecture: the organization and structure of information interfaces, ideally optimized to help people use the interface to meet an information need (library system, search engine, …)

History

At the heart of all of these fields is the problem of information overload, which goes back a long way in intellectual history.

In a sense we might trace it back to Antiquity, with Seneca the Younger (1st c. AD), who commented that “the abundance of books is distraction”, or with Ecclesiastes 12:12: “of making books there is no end”. Indeed, compendia, anthologies, abbreviations and the like became very popular in the Middle Ages as a way to manage information. However, with the arrival of the printing press, information overload became truly problematic. We see people like Erasmus complaining about the swarms of new books and looking for ways to further organize, compile and index information in thesauri, encyclopaedias, etcetera. And of course, the arrival of digital carriers and finally the Internet has done nothing to mitigate this issue. Quite the contrary.

Although the study of humanity’s strategies to cope with information overload is well beyond the scope of this course, I would still like to indulge in one particular example, which, for us Belgians, is close to home.

It is the story of Paul Otlet and the Internet before the Internet. Otlet, born in 1868, was a Belgian lawyer and information pioneer. In 1904, together with Henri La Fontaine, he created the Universal Decimal Classification (UDC), an information system which divides all knowledge into nine categories (with a tenth held open for expansion), such as “linguistics and literature” or “mathematics and natural sciences”, further broken down into 70,000 subdivisions. Updated and translated into 50 languages, the UDC is still widely used today in 130 countries, especially in libraries.

Yet, Otlet’s vision was much larger than bibliography alone. Together with La Fontaine, in 1910 he started planning a center that would hold all the world’s information in an organized and accessible form, a veritable “city of knowledge”, called the Mundaneum. Its catalog drawers, holding over 15 million paper index cards, were only the start of this “world brain”, as Otlet’s revolutionary ideas went much further than that. He planned on using microphotography techniques to boost storage and even proposed a network of telescopes and TVs (a recent invention) in order to decentralize information access.

Otlet’s end goal seems to have been the idea of universal knowledge, leading to universal equality, and thus, universal peace. Ironically, the First World War was one of many factors that contributed to Otlet’s plans remaining largely unfinished. Sadly, Otlet is an obscure figure today, even in Belgian history, although there is a small Mundaneum museum in the Belgian city of Mons, where some tiny fraction of all the world’s knowledge still resides on old index cards stored in wooden cabinets…

Ethics

Something else that is beyond the scope of this course is information ethics: the important question of right and wrong when it comes to information and data. Still, we cannot move on without at least briefly touching on the topic.

Living in the Information Age is of course a wonderful thing. Most vital aspects of our lives (health, work, communication, travel, …) rely on information. It started with newspapers, radio and TV. Then came computers, the Internet and mobile phones. Today, all of these media are interconnected in our smartphones, tablets and laptops. They are fed by big data and are increasingly capable thanks to artificial intelligence and machine learning.

While the possibilities of the Information Age can be immensely empowering, it is also clear that with great power comes great responsibility. The flip side of our dependence on information is the emergence of phenomena like computer crime, the digital dark age, cyberterrorism, the digital divide, child pornography on the Dark Web, and many, many more.

Information technologies are often ambivalent, and it is not easy to see when they cross the line. Here are two examples.

Virtual immortality

A South Korean TV documentary gave a mother the opportunity to meet a digital version of her dead daughter after her body, voice and face were recreated using virtual reality technology. You can read more about it in these sources and also watch the footage on YouTube. Do you find the story beautiful or heartbreaking?

GPT-3

GPT-3, in full Generative Pre-trained Transformer 3, is a language model: a program that, given an input text, is trained to generate text, e.g. to predict the next word or words (thus writing a story) or to translate (just look at this example!). GPT-3 is one of the largest such models, having been trained on about 45 terabytes of text data taken from thousands of websites such as Wikipedia, plus online books and many other sources (definition from this Medium article).
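To make “predicting the next word” a little more tangible, here is a deliberately tiny sketch. It is not how GPT-3 works internally (GPT-3 is a large neural network), but a much simpler stand-in technique, a bigram model that merely counts which word follows which in its training text. The corpus and the function names are invented for illustration.

```python
from collections import Counter, defaultdict

# A toy training corpus; a real model is trained on vastly more text.
corpus = "the cat sat on the mat . the cat ate the fish ."


def train_bigram_model(text):
    """Count, for every word, which words follow it in the training text."""
    tokens = text.split()
    followers = defaultdict(Counter)
    for current, following in zip(tokens, tokens[1:]):
        followers[current][following] += 1
    return followers


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    if word not in model:
        return None
    return model[word].most_common(1)[0][0]


model = train_bigram_model(corpus)
next_word = predict_next(model, "the")
print(next_word)
```

In this corpus “the” is followed by “cat” twice and by “mat” and “fish” once each, so the model predicts “cat”. Even this toy version shows that a language model can only echo the patterns present in the text it was trained on.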

The quality of the text generated by GPT-3 is so high that it is difficult to distinguish from text written by a human, which has both benefits and risks. One of the risks is that if a certain bias (Eurocentric, sexist, racist, …) is present in the data the model is trained on, this bias will be reflected in the texts produced by the model.

Some concrete examples can be found in these sources: